Tag: graphics

-

High Quality Zen

Announcing High Quality Zen, a batch renderer based on Zen photon garden. I built it as a way to further experiment with this 2D raytracing style, adding animation and color.

-

How we built a Super Nintendo out of a wireless keyboard

This is a guest article I wrote for Adafruit about how we built the hardware behind the new Sifteo Cubes, the second generation of our gaming platform that's all about tactile sensation and real, physical objects.

-

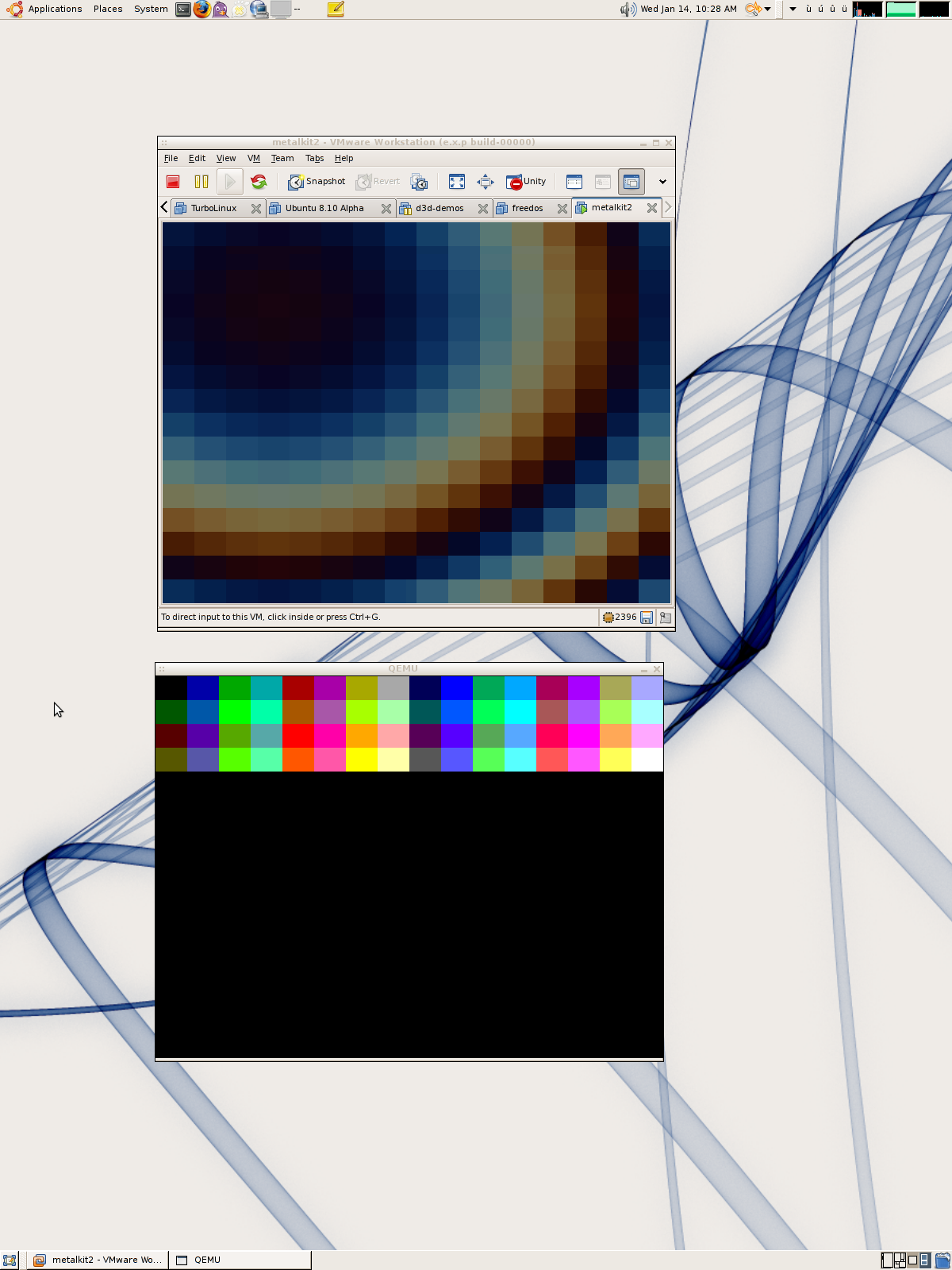

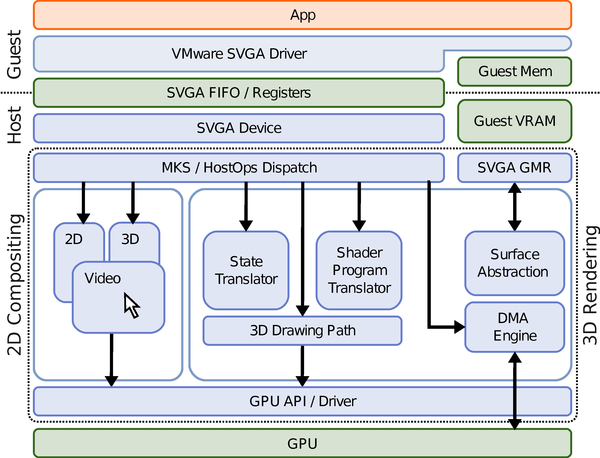

Graphics in VMware Fusion 3 and Workstation 7

I work on the graphics virtualization team at VMware. The company is about to release two new desktop virtualization products: Fusion 3.0 is in beta, with a release coming tomorrow. Workstation 7.0 has a public release candidate available. There are a lot of exciting features in these releases, and my team has been working really…

-

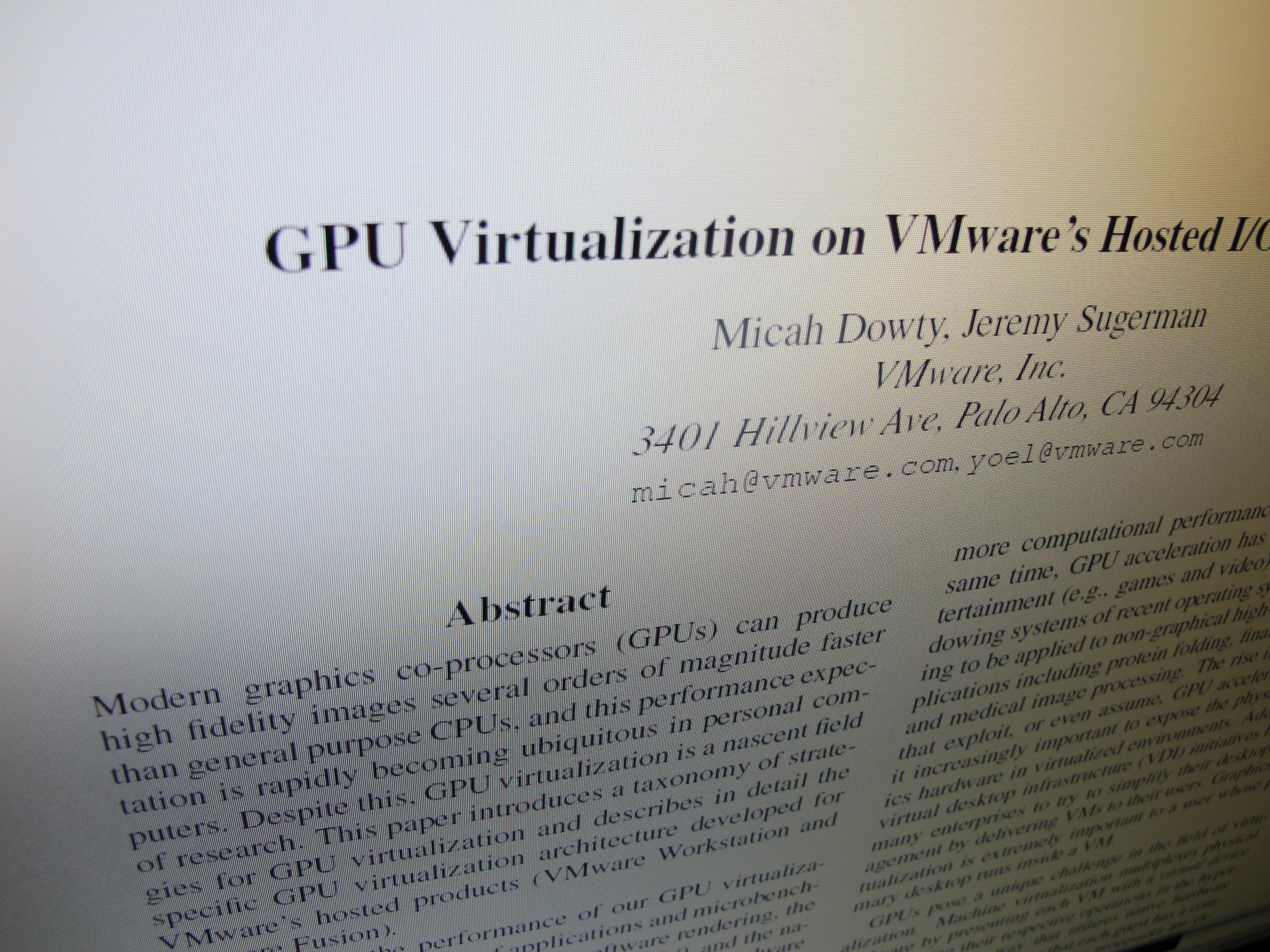

GPU Virtualization at WIOV ’08

I just got back from the first USENIX Workshop on I/O Virtualization. WIOV was an interesting workshop. It was really nice to see what I/O virtualization looks like from a wide range of viewpoints. There was some good industry perspective from AMD, Intel, Microsoft, and Oracle. There was also a wide range of academic…

-

Speaking at USENIX WIOV 2008

Well, this Monday I submitted the final copy of my paper, and yesterday everything was approved. Jeremy Sugerman and I wrote a paper for the USENIX Workshop on I/O Virtualization’s Industrial Practice session: GPU Virtualization on VMware’s Hosted I/O Architecture. We’re on the program for a 15-minute talk at the workshop in San Diego this…

-

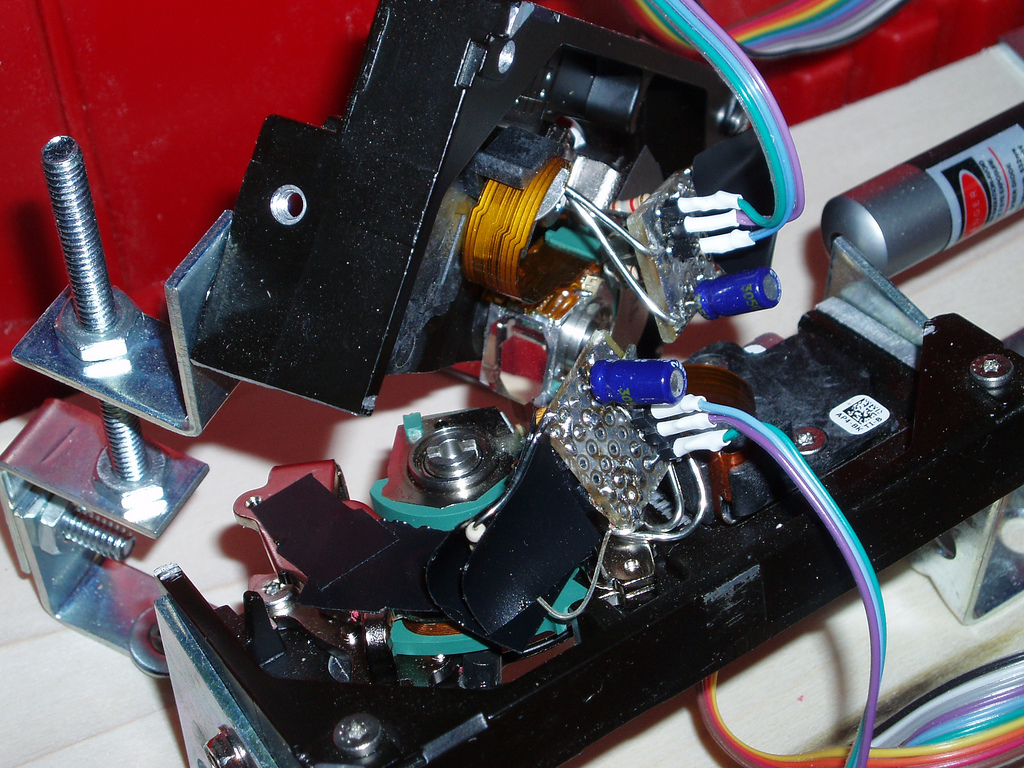

Laser projector update

Looks like my hard disk laser projector made the MAKE blog. Sweet 😉 I've been hacking on the software for the projector quite a bit this week, mostly on the code responsible for importing and converting vector graphics data. In a typical laser projector, you have a high-speed DAC connecting a pair of analog servo…
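The excerpt stops before describing the import code itself, so this is only a generic sketch of one step such a converter commonly needs, not this projector's actual software: breaking each straight segment of a vector path into evenly spaced points, so the servos are never asked to jump farther than `max_step` between consecutive DAC samples. The function name, the `(x, y)` tuple format, and the units of `max_step` are all assumptions.

```python
import math


def resample_polyline(points, max_step):
    """Hypothetical helper: subdivide a polyline so consecutive points are <= max_step apart.

    points: list of (x, y) tuples in DAC units (an assumed coordinate space).
    """
    if not points:
        return []
    out = [points[0]]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dist = math.hypot(x1 - x0, y1 - y0)
        # Smallest number of equal sub-steps so that none exceeds max_step.
        steps = max(1, math.ceil(dist / max_step))
        for i in range(1, steps + 1):
            t = i / steps
            out.append((x0 + (x1 - x0) * t, y0 + (y1 - y0) * t))
    return out
```

Evenly spaced samples keep the beam's speed roughly constant along a line, which keeps brightness roughly even. This sketch ignores blanking between separate shapes.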

-

Times New Roman at 532 nanometers

After running the ILDA test pattern at only 4K on my hard disk laser scanner, I really wanted to see how well the projector would do with the kinds of “real” vector graphics that I expected to be able to display. Most commercial ILDA frames are way too complicated for it. As I mentioned, the…
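A rough point budget shows why complex frames are a problem. This assumes "4K" means 4,000 points per second (the usual way ILDA test-pattern scan rates are quoted) and assumes a refresh target of 30 frames per second to keep flicker down:

$$
N = \frac{4000\ \text{points/s}}{30\ \text{frames/s}} \approx 133\ \text{points per frame}
$$

A frame much larger than that forces a lower refresh rate and more visible flicker.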

-

Hard disk laser scanner at ILDA 4K

I should have blogged about this long ago, as I’ve been working on it off and on for about three months now, but today I reached an arbitrary milestone that compels me to post 😉 I’m still actively working on this project, so I’ll try to make updates occasionally, and if I end up putting…

-

3D Graphics at VMware

Despite all the random posts about helicopters and embedded systems on here, I haven’t really mentioned what I spend most of my time on these days… I work in the Interactive Devices group at VMware. For people who aren’t familiar with VMware’s products, we do virtualization: software that lets you run multiple virtual computers inside…

-

NOP 0x004031A9

It’s like circuit bending for digital art. Medium: Fyre 1.0 (Win32), IDA Pro (The Interactive Disassembler). (Okay, maybe I’m just having too much fun with my new copy of IDA…)

-

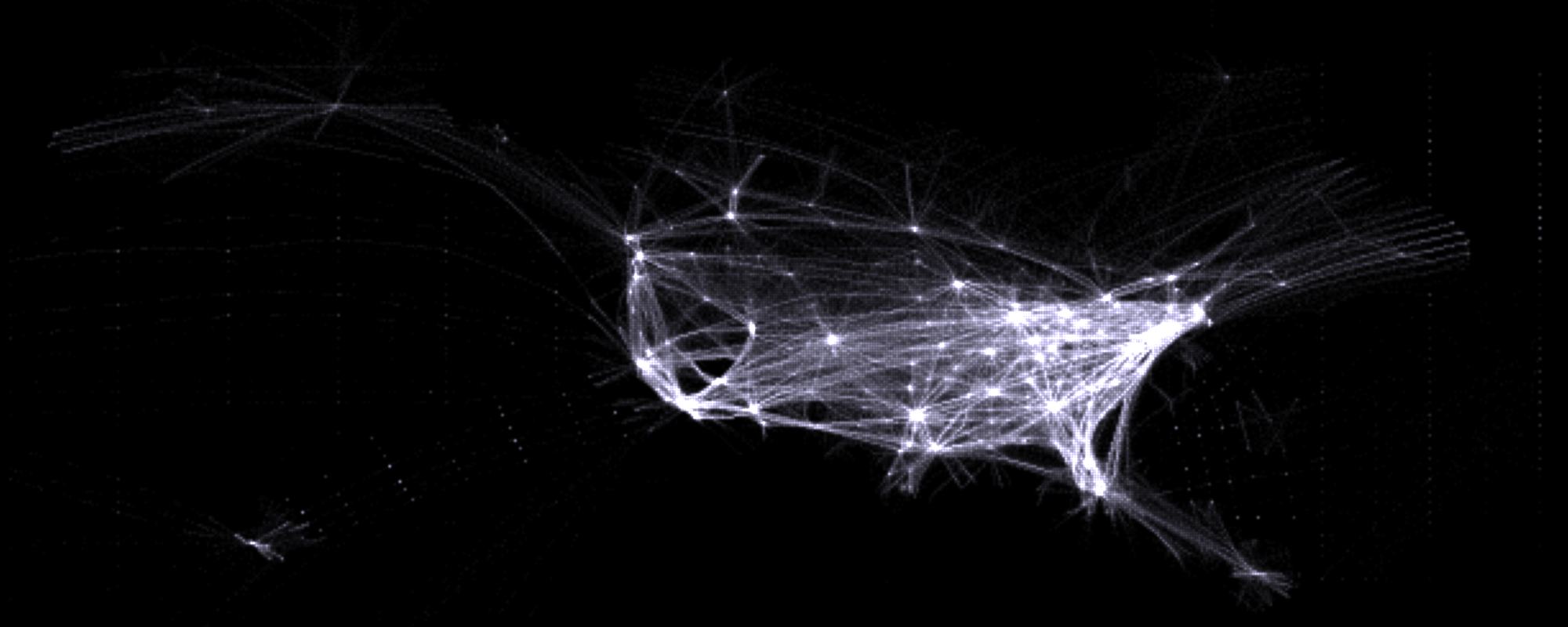

Flightaware route histogram

Flightaware + Fyre: The above image is part of a histogram generated from Flightaware's 24-hour time lapse. Click the link for the uncropped version. The original video is a QuickTime wrapper around individual PNG frames. I used mencoder to strip off the QuickTime wrapper, then a small Python script split the video streams into individual…
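The original script isn't shown, so here is a minimal sketch of how such a splitter could work. It assumes the unwrapped stream is simply complete PNG files stored back to back. Instead of searching for the signature bytes, it walks PNG chunks (length, type, payload, CRC) until each file's `IEND` chunk. The function name `split_pngs` is hypothetical.

```python
import struct

# The fixed 8-byte signature that starts every PNG file.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def split_pngs(data):
    """Hypothetical helper: split bytes holding back-to-back PNG files into a list of PNGs."""
    frames = []
    pos = 0
    while pos < len(data):
        if data[pos:pos + 8] != PNG_SIGNATURE:
            raise ValueError(f"expected PNG signature at offset {pos}")
        end = pos + 8
        while True:
            if end + 8 > len(data):
                raise ValueError("truncated PNG stream")
            # Chunk layout: 4-byte big-endian length, 4-byte type, payload, 4-byte CRC.
            (length,) = struct.unpack(">I", data[end:end + 4])
            chunk_type = data[end + 4:end + 8]
            end += 12 + length  # skip header, payload, and CRC
            if end > len(data):
                raise ValueError("truncated PNG stream")
            if chunk_type == b"IEND":
                break
        frames.append(data[pos:end])
        pos = end
    return frames
```

Walking chunk lengths avoids false splits if the signature bytes happen to appear inside compressed image data. CRCs are not verified here.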

-

Oversampling in Fyre

Despite Fyre 1.0 being “completely done” for a while now, I have to nitpick it occasionally. I recently composed an image which brought out a particularly bad oversampling bug in Fyre. Fyre implements antialiasing using an oversampling technique, much like FSAA on modern video cards. In Fyre 1.0.0, we just chop each pixel into an…
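The excerpt cuts off before saying exactly how Fyre 1.0.0 divides each pixel, so this is not Fyre's code. It is a generic sketch of grid oversampling: accumulate the image at `factor` times the resolution along each axis, then average each `factor` x `factor` block (a box filter) into one output pixel. The function name and the flat row-major buffer layout are assumptions.

```python
def downsample_box(hi_res, width, height, factor):
    """Hypothetical helper: average each factor x factor block into one output pixel.

    hi_res: flat row-major list of (width * factor) * (height * factor) values.
    Returns a flat row-major list of width * height floats.
    """
    hi_w = width * factor
    out = []
    for y in range(height):
        for x in range(width):
            total = 0.0
            # Sum every subsample that falls inside output pixel (x, y).
            for sy in range(factor):
                row = (y * factor + sy) * hi_w
                for sx in range(factor):
                    total += hi_res[row + x * factor + sx]
            out.append(total / (factor * factor))
    return out
```

A box filter is the simplest choice. It treats every subsample inside a pixel equally and ignores neighboring pixels, which is also roughly how basic ordered-grid FSAA resolves.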

-

Gimp 2.3.7

I finally got around to installing the latest development release of Gimp, 2.3.7. It's definitely worth a try. The menus have been rearranged a bit, which is a little disconcerting for a long-time Gimp user, but the new layout makes a lot of sense. It's always nice to see the new splash screens, but I'm still a little…